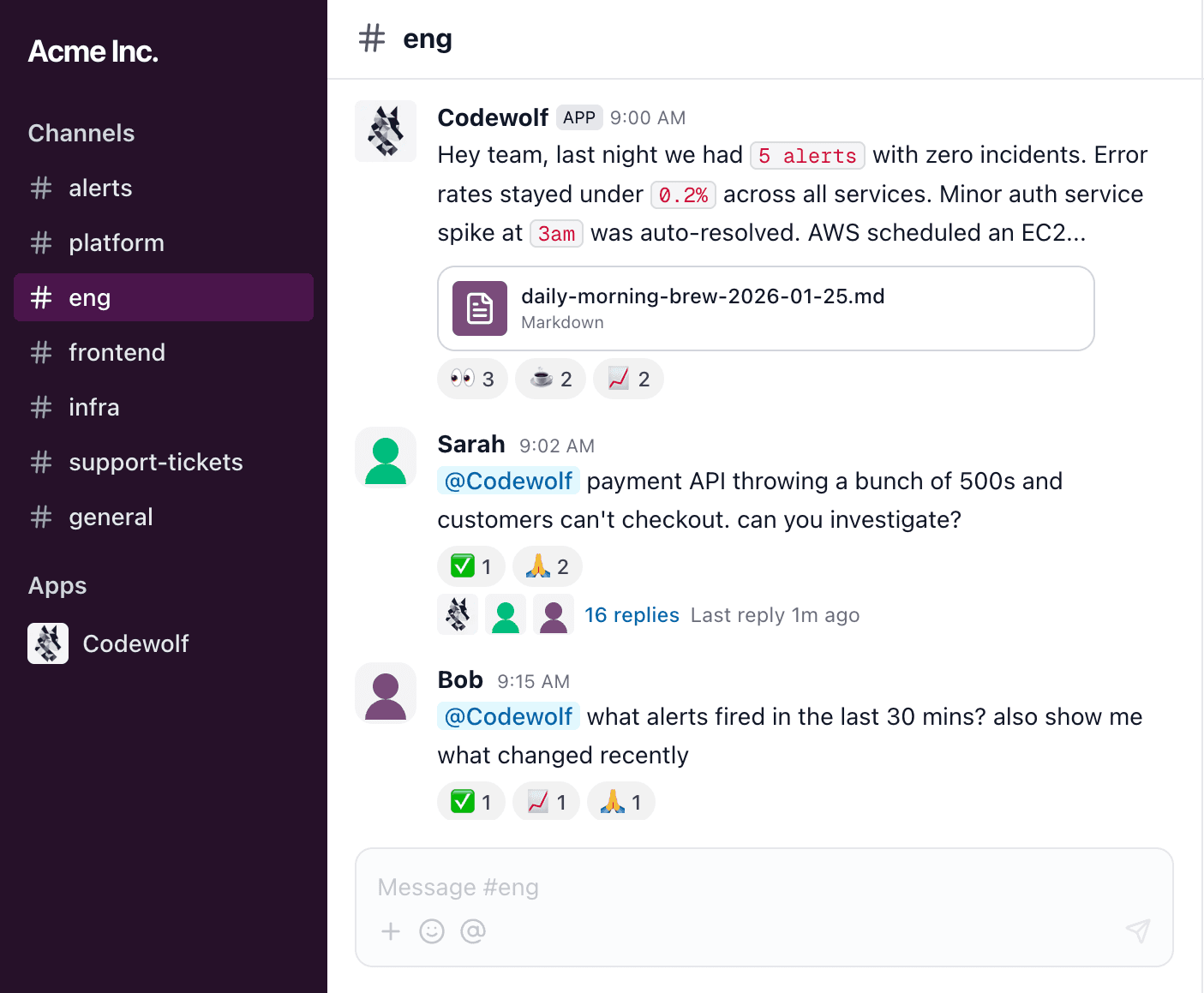

Production that runs itself

AI agents that work while you sleep. They investigate, fix, prevent, optimize, and understand, directly in Slack.

What Codewolf agents do

Investigate

Tag @Codewolf in Slack when issues strike. AI agents analyze deployments, correlate logs across services, trace requests, and identify root causes. From alert to diagnosis in minutes.

Elevated error rate on checkout (>5%), Current Value: 12.8%, Threshold: 5%

error_rate(checkout-service, env:prod) > 5%

@Codewolf can you investigate this alert?

On it Sarah! Assigning agents to investigate the checkout error rate:

I'll report back with findings shortly.

Here's what I already know from recent events:

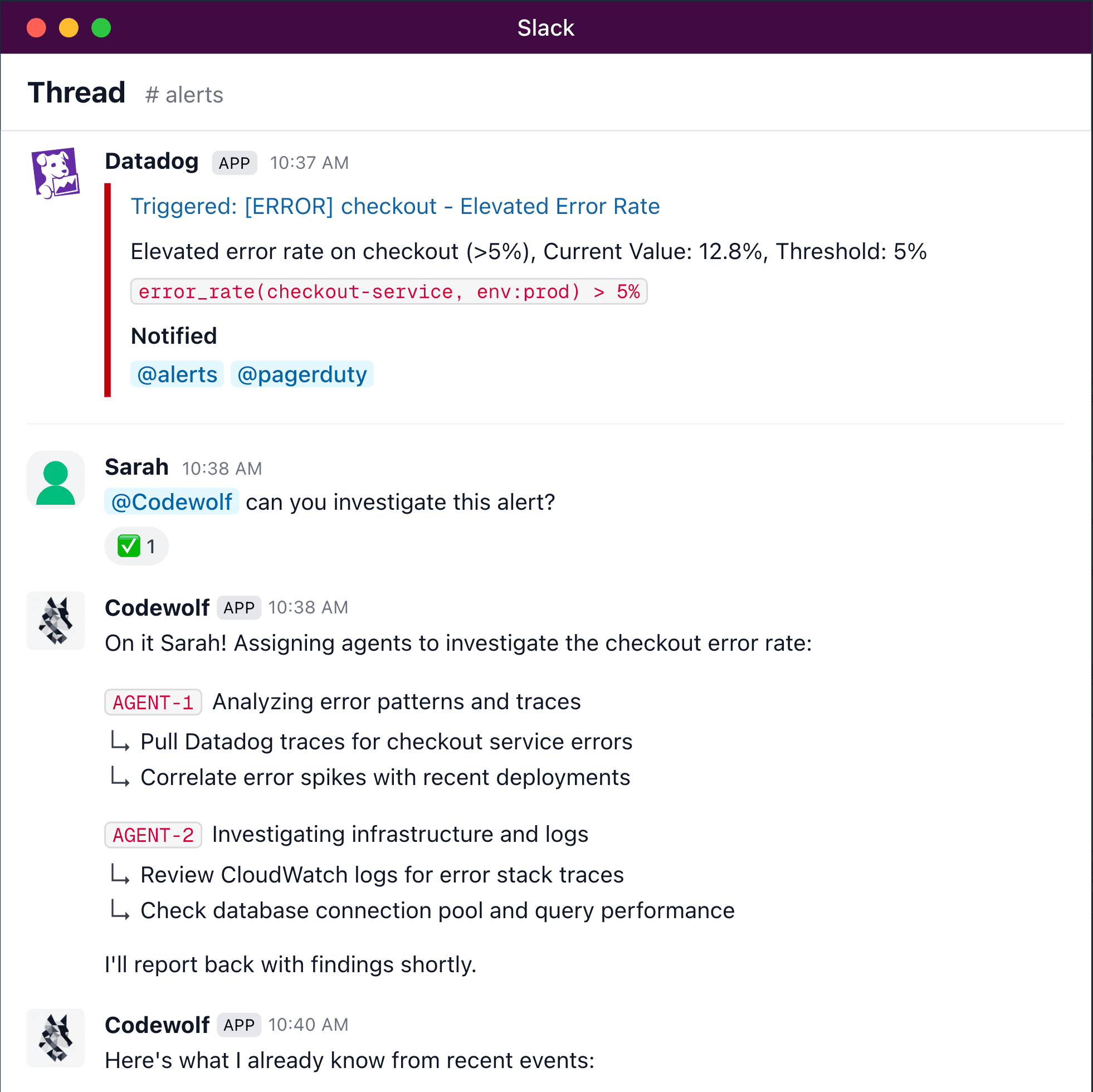

Investigation Complete

🔴 Checkout service confirmed DOWN — returning 504 Gateway Timeout

1️⃣ Root Cause Analysis: Backend service failures cascading to frontend — 92% confidence

Evidence:

- flagd feature flag service — resolveBoolean operations failing (14 error traces)

- product-reviews service — OpenAI gpt-4o-mini API calls failing (5 error traces)

- frontend — getServerSideProps / failing when calling downstream services (12 error traces)

- frontend-proxy — 44 error traces, ~4.3% error rate

Error Propagation Chain:

↓

product-reviews → OpenAI API ❌

↓

frontend (getServerSideProps /) ❌

↓

frontend-proxy → 504 Gateway Timeout

Affected Pods:

- frontend-proxy-b6fb5b44f-5wzr9

- frontend-78cf8ff86d-pxvfr

- flagd-7bd55d47bb-fmcqm

- product-reviews-cdc7c8c5f-mpmhk

Recommended Actions:

- 1️⃣ Immediate: Check flagd pod health — feature flag failures are affecting multiple services

- 2️⃣ Immediate: Verify OpenAI API key/quota for product-reviews service

- 3️⃣ Consider restarting flagd if it's in a bad state

Would you like me to dig deeper into the flagd or product-reviews failures?
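One way to picture the root-cause step in the investigation above: given a service dependency graph and the set of components reporting errors, the likely origins are the failing components that have no failing dependencies of their own. The sketch below is illustrative only. The edge list and the `root_cause_candidates` helper are assumptions made for this example, not a description of how Codewolf works internally.

```python
from typing import Dict, List, Set

# Hypothetical dependency edges, loosely based on the services named above.
DEPENDS_ON: Dict[str, List[str]] = {
    "frontend-proxy": ["frontend"],
    "frontend": ["product-reviews", "flagd"],
    "product-reviews": ["openai-api", "flagd"],
    "flagd": [],
    "openai-api": [],
}


def root_cause_candidates(depends_on: Dict[str, List[str]], failing: Set[str]) -> List[str]:
    """Return failing components none of whose dependencies are failing."""
    return sorted(
        svc
        for svc in failing
        if not any(dep in failing for dep in depends_on.get(svc, []))
    )


# Assumed set of components with error traces.
failing = {"frontend-proxy", "frontend", "product-reviews", "flagd", "openai-api"}
print(root_cause_candidates(DEPENDS_ON, failing))
```

With these assumed edges, the candidates are `flagd` and `openai-api`, which lines up with the two immediate actions recommended in the report.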

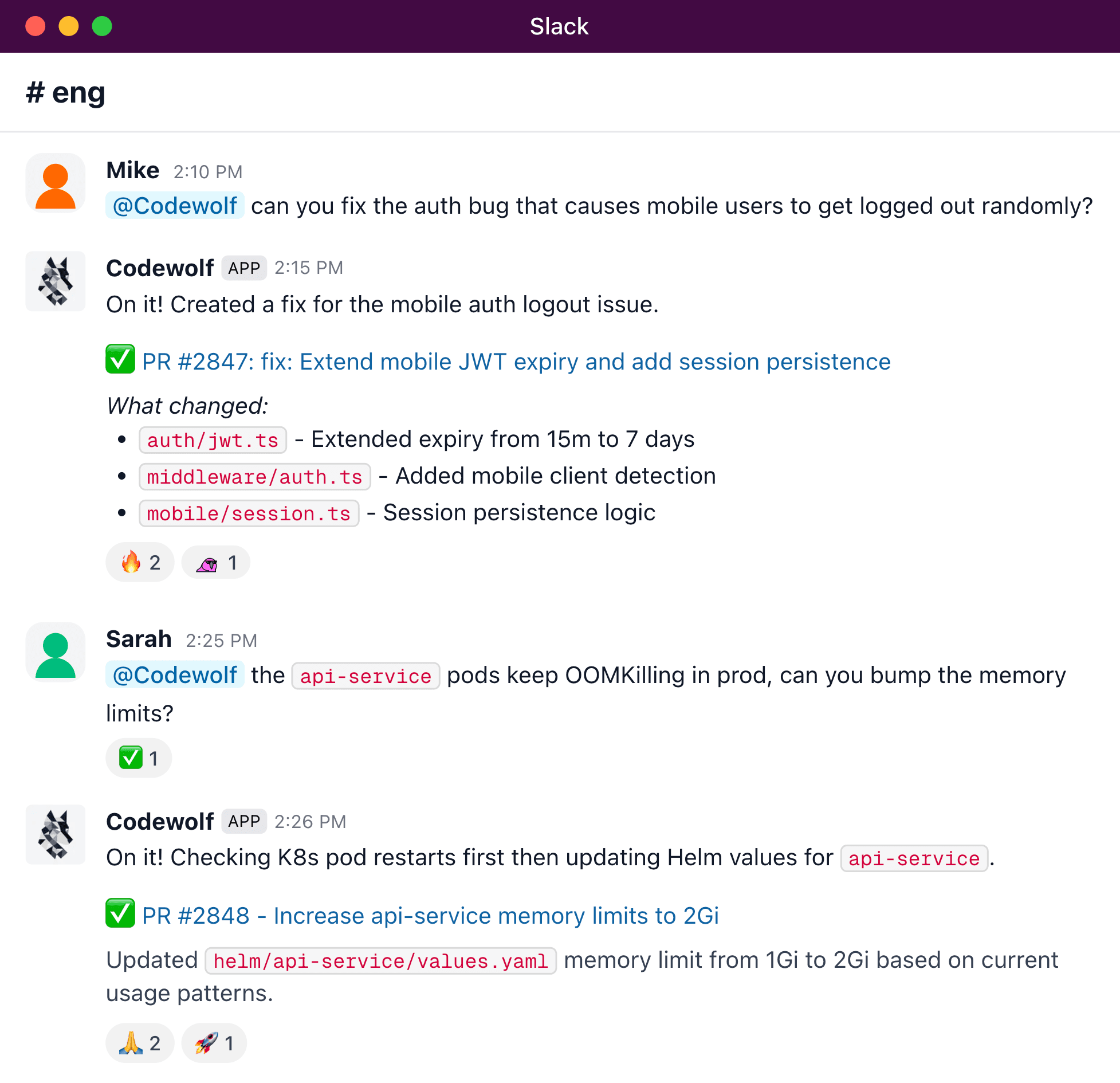

@Codewolf can you fix the auth bug that causes mobile users to get logged out randomly?

On it! Created a fix for the mobile auth logout issue.

- auth/jwt.ts - Extended expiry from 15m to 7 days

- middleware/auth.ts - Added mobile client detection

- mobile/session.ts - Session persistence logic

@Codewolf the api-service pods keep OOMKilling in prod, can you bump the memory limits?

On it! Checking K8s pod restarts first then updating Helm values for api-service.

✅ PR #2848 - Increase api-service memory limits to 2Gi

Updated helm/api-service/values.yaml memory limit from 1Gi to 2Gi based on current usage patterns.
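Sizing a limit "based on current usage patterns" could look something like the sketch below: take the peak observed memory, add headroom, and round up to the next power-of-two number of GiB. The headroom factor, the sample peak figure, and the `recommend_memory_limit_gib` helper are all hypothetical.

```python
import math


def recommend_memory_limit_gib(peak_usage_mib: float, headroom: float = 1.5) -> int:
    """Peak usage times headroom, rounded up to the next power-of-two GiB."""
    if peak_usage_mib <= 0:
        raise ValueError("peak usage must be positive")
    needed_gib = peak_usage_mib * headroom / 1024
    # Never recommend less than 1 GiB, even for very small workloads.
    return max(1, 2 ** math.ceil(math.log2(needed_gib)))


# Hypothetical peak of 1100 MiB, which would be OOMKilled under a 1Gi limit.
print(f"{recommend_memory_limit_gib(1100)}Gi")
```

For the assumed 1100 MiB peak this yields a 2Gi limit, the same value as in the PR above.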

@Codewolf can you update the checkout-service to use the new payment-gateway-v2 endpoint? need to update all the downstream dependencies too

On it! Updating service endpoints and dependencies.

✅ PR #2849 - Migrate checkout-service to payment-gateway-v2

Updated checkout-service config to use v2 endpoint, modified order-service and notification-service to handle new webhook format, added feature flag for gradual rollout, and updated Kubernetes service mesh routes.

Fix

AI agents don't just find problems; they fix them. With full production context and live telemetry, agents open PRs with code patches and configuration changes that resolve the underlying issue.

Prevent

Catch issues before they reach production. AI agents review PRs for risks and run post-deployment checks. Stop problems before they become incidents.

PR #2891 opened by alex: Add transaction status column to payment-service

Reviewed this PR and found potential production impact

- Migration adds non-nullable column to transactions table (847M rows)

- Will lock table during backfill, estimated 12-15 minutes

- Could cause payment processing downtime during peak hours

View full review on GitHub
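A review like this one could start from a simple heuristic: scan the migration for columns added as `NOT NULL` with no `DEFAULT`. The sketch below is a deliberately naive, regex-based check. The sample SQL and the `risky_columns` helper are hypothetical, and whether such a change actually locks the table depends on the database engine and version.

```python
import re
from typing import List

# Captures the column name and its definition from each ADD COLUMN clause.
# Naive: a type containing a comma, such as NUMERIC(10,2), would be cut short.
ADD_COLUMN = re.compile(r"ADD\s+COLUMN\s+(\w+)\s+([^,;]+)", re.IGNORECASE)


def risky_columns(migration_sql: str) -> List[str]:
    """Return names of columns added as NOT NULL with no DEFAULT value."""
    risky = []
    for name, definition in ADD_COLUMN.findall(migration_sql):
        upper = definition.upper()
        if "NOT NULL" in upper and "DEFAULT" not in upper:
            risky.append(name)
    return risky


# Hypothetical migration resembling the PR described above.
sql = "ALTER TABLE transactions ADD COLUMN status VARCHAR(32) NOT NULL;"
print(risky_columns(sql))
```

A real review would also need the table's row count and traffic pattern to estimate impact; the regex only surfaces candidates worth a closer look.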

frontend-service v2.4.1 deployed to production • View commit

On it! I see frontend-service v2.4.1 just deployed. Running post-deployment checks:

Will report back in 15 minutes if I see anything suspicious.

PR #2903 opened by jordan: Scale RDS instance for production database

Reviewed Terraform changes and found infrastructure impact

- Scales RDS from db.r6g.xlarge → db.r6g.2xlarge

- Cost increase: ~$620/month additional AWS spend

- Requires 3-5 minute downtime window for instance modification

- Recommend scheduling during maintenance window (Sunday 2-4 AM UTC)

View full analysis on GitHub

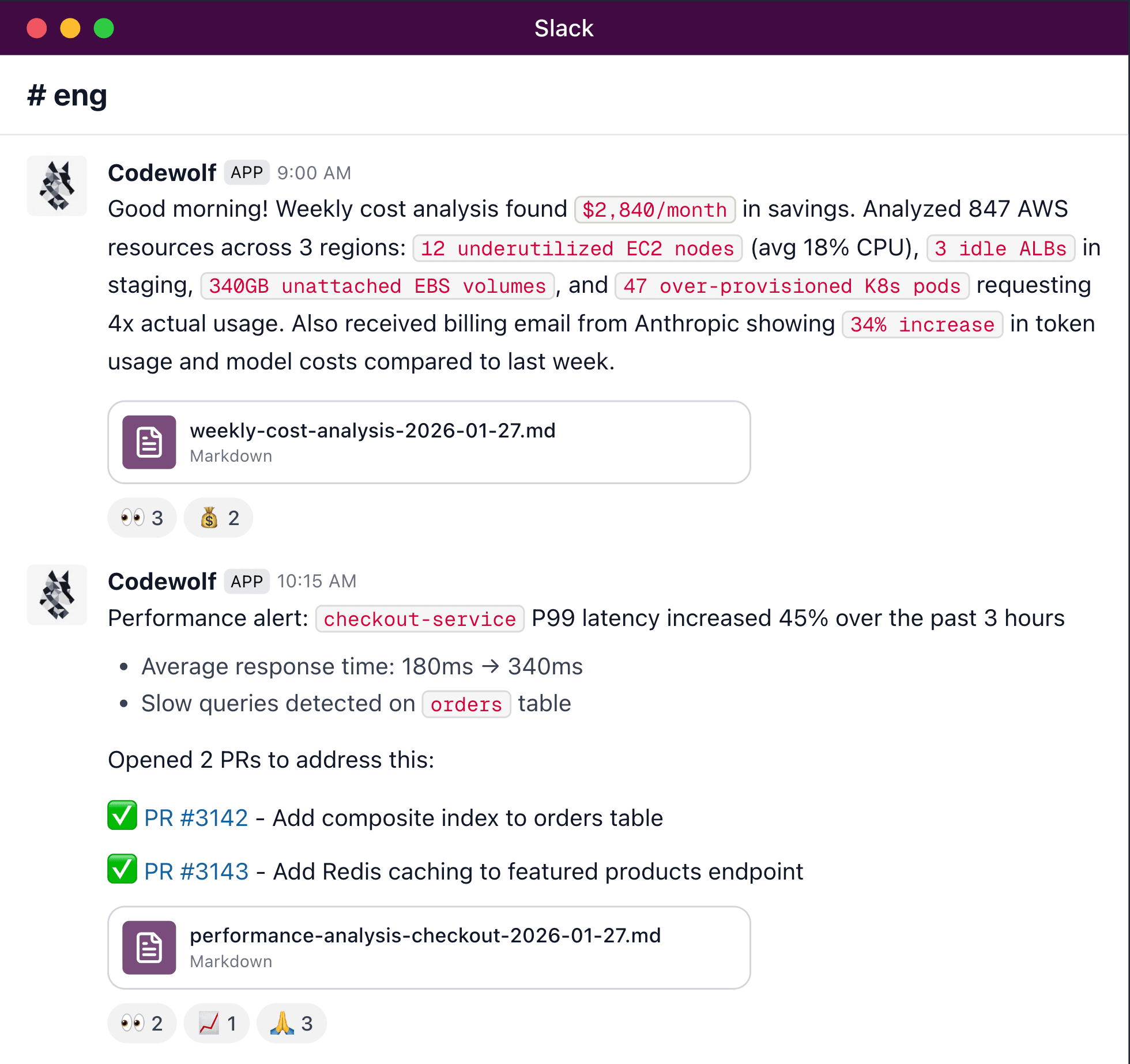

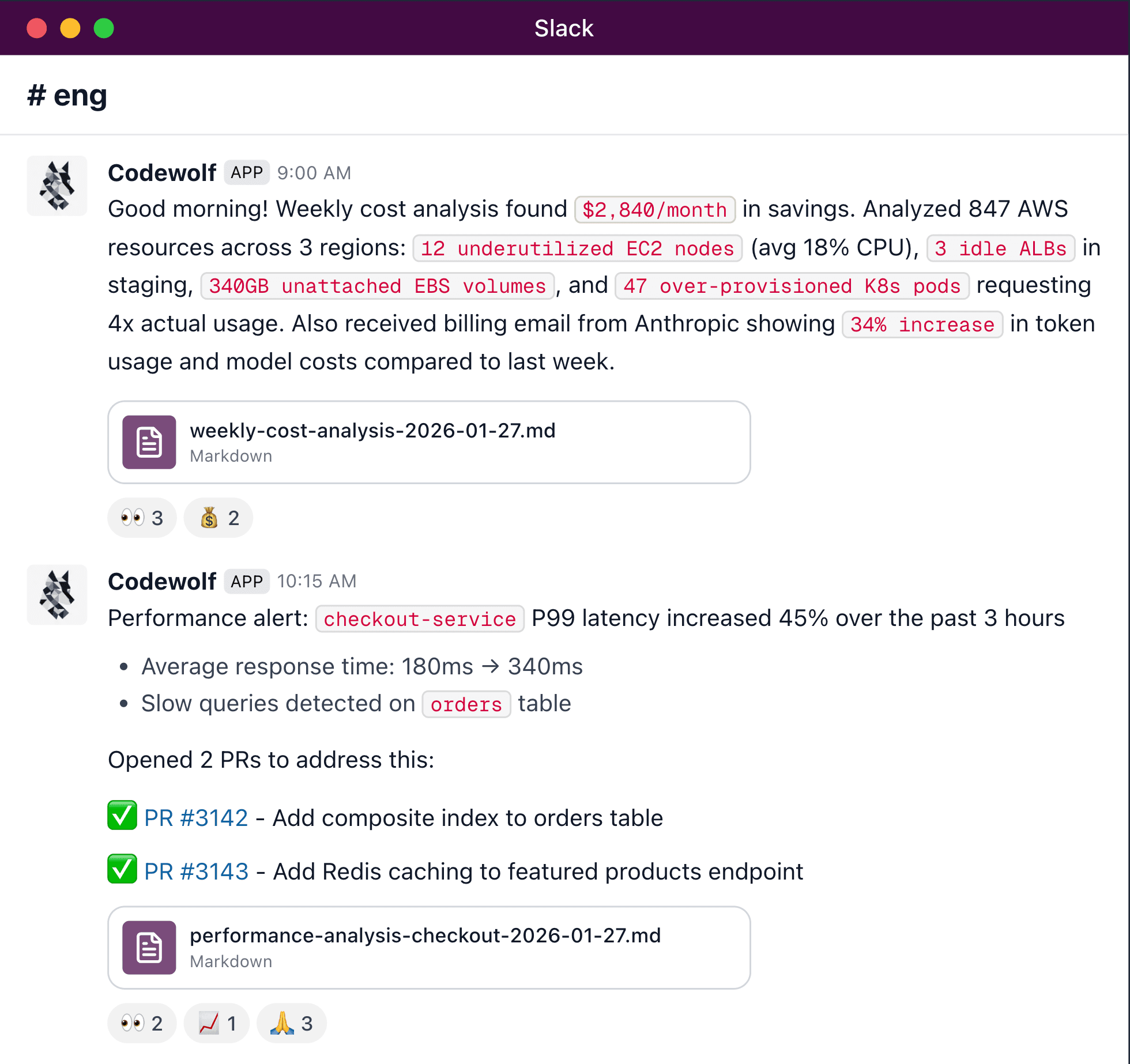

Good morning! Weekly cost analysis found $2,840/month in savings. Analyzed 847 AWS resources across 3 regions: 12 underutilized EC2 nodes (avg 18% CPU), 3 idle ALBs in staging, 340GB of unattached EBS volumes, and 47 over-provisioned K8s pods requesting 4x actual usage. Also received a billing email from Anthropic showing a 34% increase in token usage and model costs compared to last week.
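As a rough illustration of the "requesting 4x actual usage" check in the report above, the sketch below flags pods whose CPU request is at least four times their observed usage. The `PodUsage` type, the sample figures, and the threshold default are assumptions for this example.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class PodUsage:
    name: str
    cpu_requested_millicores: int
    cpu_used_millicores: int


def over_provisioned(pods: List[PodUsage], ratio_threshold: float = 4.0) -> List[str]:
    """Return names of pods whose CPU request is at least ratio_threshold times usage."""
    return [
        p.name
        for p in pods
        # Skip pods with no recorded usage to avoid dividing by zero.
        if p.cpu_used_millicores > 0
        and p.cpu_requested_millicores / p.cpu_used_millicores >= ratio_threshold
    ]


# Hypothetical sample data.
pods = [
    PodUsage("api-service-1", 2000, 450),
    PodUsage("worker-1", 1000, 800),
    PodUsage("cron-1", 500, 100),
]
print(over_provisioned(pods))
```

The same ratio test would apply to memory requests; CPU is used here only to keep the example short.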

Performance alert: checkout-service P99 latency increased 45% over the past 3 hours

- Average response time: 180ms → 340ms

- Slow queries detected on orders table

Opened 2 PRs to address this:

✅ PR #3142 - Add composite index to orders table

✅ PR #3143 - Add Redis caching to featured products endpoint
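For readers unfamiliar with the metric in the alert above, P99 latency is the value that 99% of requests come in at or under. The sketch below uses the nearest-rank method and a plain percent-change calculation. The helper names and the sample latencies are hypothetical, and monitoring tools may use a different percentile definition.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: int) -> float:
    """Nearest-rank percentile: smallest sample with at least pct% of samples at or below it."""
    if not samples:
        raise ValueError("no samples")
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def percent_change(before: float, after: float) -> float:
    """Relative change from before to after, in percent."""
    return (after - before) * 100 / before


# Hypothetical P99 values, in milliseconds, from two consecutive windows.
p99_before, p99_after = 200, 290
print(f"P99 change: {percent_change(p99_before, p99_after):.0f}%")
```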

Daily traffic trends from PostHog: 12.4K unique visitors (↑18% vs yesterday). /pricing page load time increased to 2.8s (was 1.4s), with a 34% bounce rate on that page. Product signup conversion dropped from 8.2% to 6.1% after yesterday's checkout UI update.

Optimize

Continuously improve your infrastructure. AI agents analyze costs, identify resource waste, recommend performance improvements, and track efficiency trends. Get daily insights without manual analysis.

Understand

Codewolf AI agents understand your entire system. Not just your codebase, but production behavior, infrastructure, service dependencies, deployment patterns, and system relationships. This deep context enables smarter investigations, better fixes, and proactive prevention.

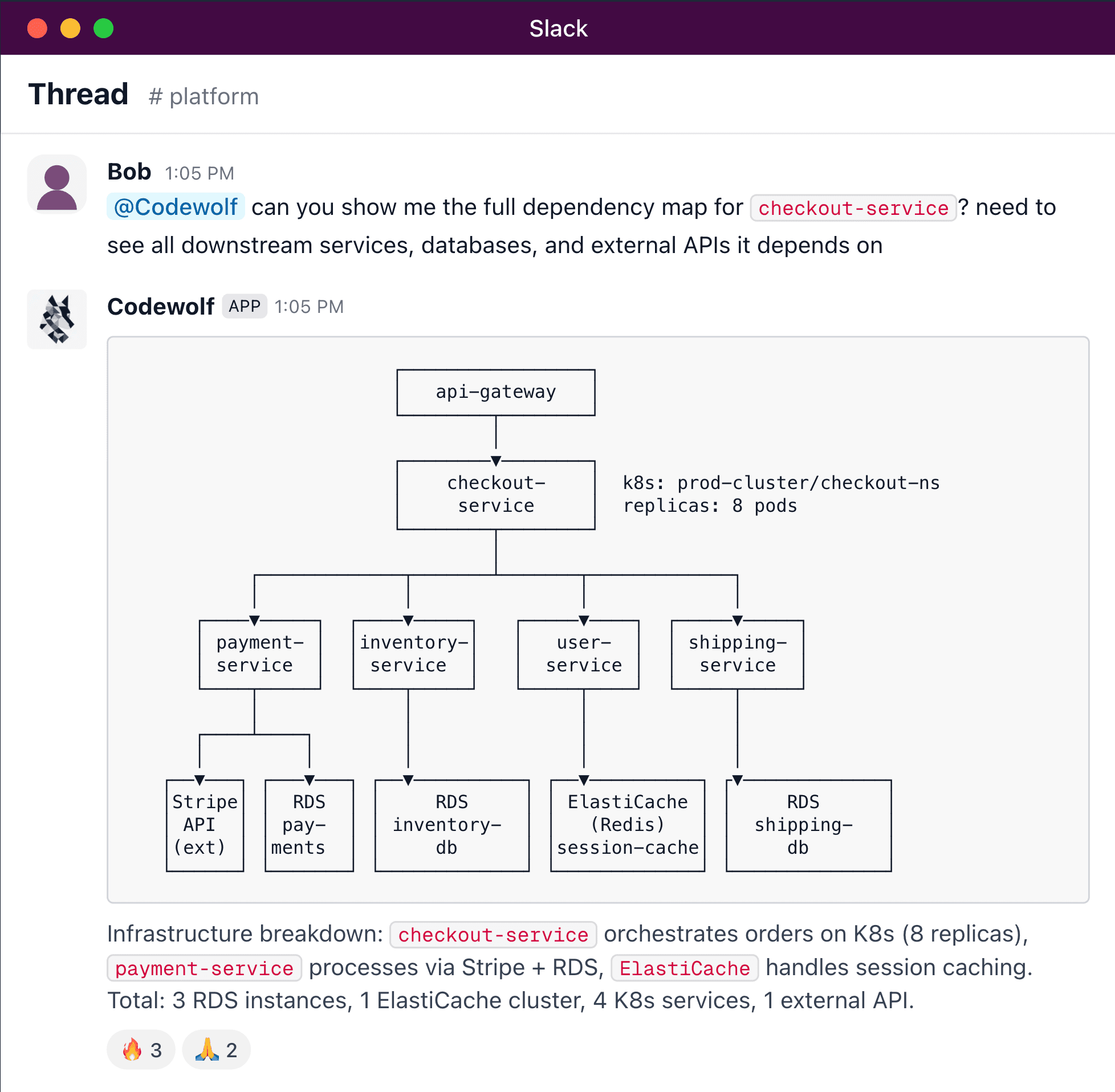

@Codewolf can you show me the full dependency map for checkout-service? need to see all downstream services, databases, and external APIs it depends on

                     ┌─────────────────┐
                     │   api-gateway   │
                     └────────┬────────┘
                              │
                     ┌────────▼────────┐
                     │    checkout-    │  k8s: prod-cluster/checkout-ns
                     │     service     │  replicas: 8 pods
                     └────────┬────────┘
                              │
        ┌─────────────┬───────┴───────┬─────────────┐
        │             │               │             │
   ┌────▼─────┐  ┌────▼─────┐   ┌─────▼────┐  ┌─────▼─────┐
   │ payment- │  │inventory-│   │  user-   │  │ shipping- │
   │ service  │  │ service  │   │ service  │  │  service  │
   └────┬─────┘  └────┬─────┘   └─────┬────┘  └─────┬─────┘
        │             │               │             │
   ┌────┴────┐        │               │             │
   │         │        │               │             │
┌──▼───┐ ┌───▼───┐ ┌──▼──────────┐ ┌──▼──────────┐ ┌▼─────────────┐
│Stripe│ │  RDS  │ │     RDS     │ │ ElastiCache │ │     RDS      │
│ API  │ │ pay-  │ │ inventory-  │ │   (Redis)   │ │  shipping-   │
│(ext) │ │ments  │ │     db      │ │session-cache│ │      db      │
└──────┘ └───────┘ └─────────────┘ └─────────────┘ └──────────────┘

Infrastructure breakdown: checkout-service orchestrates orders on K8s (8 replicas), payment-service processes via Stripe + RDS, ElastiCache handles session caching. Total: 3 RDS instances, 1 ElastiCache cluster, 4 K8s services, 1 external API.
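A dependency map like the one above is, underneath, a graph walk. The sketch below transcribes the diagram's edges into a dictionary and does a breadth-first traversal to list everything downstream of checkout-service, then tallies the results by kind. The node identifiers (for example `rds-payments`) and kind labels are shorthand chosen for this example, and the `downstream` helper is hypothetical.

```python
from collections import Counter, deque
from typing import Dict, List

# Edges transcribed from the diagram; identifiers are shorthand for this example.
DEPENDS_ON: Dict[str, List[str]] = {
    "checkout-service": ["payment-service", "inventory-service", "user-service", "shipping-service"],
    "payment-service": ["stripe-api", "rds-payments"],
    "inventory-service": ["rds-inventory-db"],
    "user-service": ["elasticache-session-cache"],
    "shipping-service": ["rds-shipping-db"],
}

KIND: Dict[str, str] = {
    "payment-service": "k8s-service",
    "inventory-service": "k8s-service",
    "user-service": "k8s-service",
    "shipping-service": "k8s-service",
    "stripe-api": "external-api",
    "rds-payments": "rds",
    "rds-inventory-db": "rds",
    "rds-shipping-db": "rds",
    "elasticache-session-cache": "elasticache",
}


def downstream(service: str) -> List[str]:
    """Breadth-first walk returning every transitive dependency of service."""
    seen: List[str] = []
    queue = deque([service])
    while queue:
        for dep in DEPENDS_ON.get(queue.popleft(), []):
            if dep not in seen:
                seen.append(dep)
                queue.append(dep)
    return seen


counts = Counter(KIND[dep] for dep in downstream("checkout-service"))
print(dict(counts))
```

The tallies match the breakdown stated above: 4 K8s services, 3 RDS instances, 1 ElastiCache cluster, and 1 external API.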

SOC 2 TYPE II CERTIFIED

Enterprise Security

Your data is never combined with other customers' data. Read-only access to your integrations with full audit logs. Your data is never used for model training.

Agents run in dedicated sandboxes

Data encrypted in transit and at rest

Enterprise authentication support

Granular permissions and audit logs


Pricing

Start with included credits. Scale as you need with pay-as-you-go.

Growth

For growing teams shipping fast

- 200 Included Agent Unit Credits

- Dedicated agent workspaces

- Unlimited users

- Standard support

Enterprise

Custom solutions for large organizations

- Custom integrations

- SSO/SAML

- Dedicated support

Careers

We're replacing engineering toil with AI agents. Join us to build the future of how engineering teams work.

Join our pack